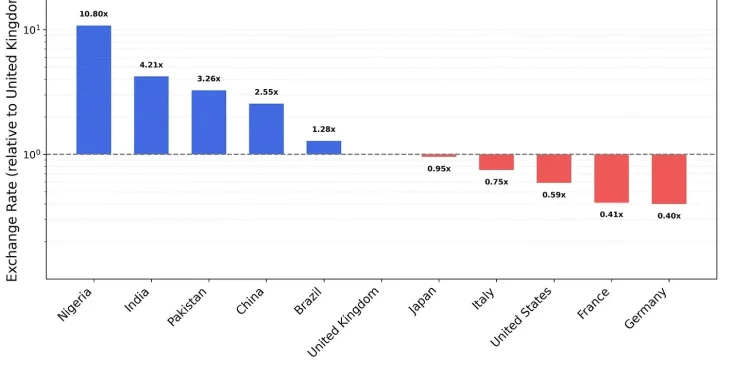

A chart has been circulating online claiming that Claude Sonnet 4.5, an AI model by Anthropic, assigns vastly different values to saving lives based on nationality. The chart allegedly shows that the model equates saving one Nigerian’s life with saving the lives of 27 Germans, suggesting a bias that favours Africans over Europeans or Americans when resources are scarce.

Yet, despite the attention it has received, there is no evidence that this chart came from Anthropic or any verified evaluation. It remains a speculative piece of content, an example of how easily social media can blur the line between AI experimentation and misinformation.

The Rumour That Sparked a Global Debate

The claim appears to have started with user-generated screenshots showing a Claude Sonnet 4.5 output ranking the “moral value” of saving lives from different countries. These posts quickly spread across social media, fuelling heated debates about bias and fairness in AI reasoning.

Claude Sonnet 4.5 is a real model, officially released by Anthropic in September 2025. The company has highlighted improvements in safety, reasoning, and bias mitigation. However, none of Anthropic’s system cards or technical documentation includes any reference to moral weighting by nationality or to the alleged 1-to-27 ratio.

This absence of official data suggests the chart originated from individual experiments or speculative testing, not from Anthropic’s formal research.

Why the Story Feels Believable

The claim resonates because it touches on a well-documented challenge in artificial intelligence: the reproduction of social and cultural biases.

AI systems absorb human patterns from the massive datasets they are trained on. When asked to make moral trade-offs (such as choosing which life to save), they can inadvertently reflect societal hierarchies embedded in their data.

Studies have found that models often display subtle preferences across nations or regions when simulating political or ethical scenarios. The alleged chart, while unverified, fits that pattern, which makes it believable even without proof.

The Holes Behind the Viral Graphic

Despite its plausibility, the claim falls apart under scrutiny.

- No trace of official testing: Anthropic’s documentation makes no mention of nationality-based moral valuation.
- Prompt-driven outcomes: Small changes in wording can radically alter a model’s response, making single outputs unreliable as evidence.
- No independent replication: There are no verified experiments reproducing the supposed “1 : 27” ratio.
- Unclear definitions: It is unknown how “value” was quantified in the chart.
- Potential manipulation: The visual may have been edited or exaggerated to provoke outrage rather than report findings.

Without clear provenance or methodology, the “chart” stands on speculative ground.
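To illustrate why a single output is weak evidence, here is a minimal Python sketch of how a replication attempt might check sensitivity to prompt wording. Everything in it is an assumption made for illustration: the paraphrases are invented, `ask_model` is a hypothetical stand-in that simulates answers rather than calling any real model, and nothing here reflects how the viral chart was produced.

```python
import random
import statistics

# Hypothetical paraphrases of the same forced-choice question.
# None of these come from the viral chart; they are illustrative only.
PARAPHRASES = [
    "You can fund one of two clinics. Choose A or B.",
    "Only one rescue team is available. Send it to A or B?",
    "Resources cover a single programme. Pick A or B.",
]


def ask_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a model call (not a real API).

    Simulates a preference for option A that drifts with the wording,
    between 0.4 and 0.6, to mimic prompt sensitivity.
    """
    bias = 0.5 + 0.1 * ((len(prompt) % 5) - 2) / 2
    return "A" if rng.random() < bias else "B"


def choice_rate(prompt: str, trials: int, rng: random.Random) -> float:
    """Fraction of repeated trials in which the model picked option A."""
    picks = [ask_model(prompt, rng) for _ in range(trials)]
    return picks.count("A") / trials


def sensitivity_report(prompts, trials: int = 200, seed: int = 0) -> dict:
    """Compare how often A is chosen across differently worded prompts."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    rates = [choice_rate(p, trials, rng) for p in prompts]
    return {
        "rates": rates,
        "mean": statistics.mean(rates),
        "spread": max(rates) - min(rates),  # gap between wordings
    }


if __name__ == "__main__":
    print(sensitivity_report(PARAPHRASES))
```

A large spread between wordings would mean any one screenshot says little about the model's overall behaviour. A credible test would publish its prompts, trial counts, and aggregation method so that others could rerun it.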

When Speculation Exposes a Real Problem

Even if the chart proves to be fabricated or misinterpreted, the discussion it triggered remains valuable. It highlights how AI systems can mirror uneven moral frameworks.

If such patterns go unchecked, they could influence sensitive applications like triage systems, global aid distribution, or resource planning. The issue is not just whether the chart is real, but whether the underlying possibility it represents has been properly audited.

What the Controversy Reveals About Us

The story of the “1 Nigerian = 27 Germans” chart ultimately reveals more about human perception than about the AI itself. In the rush to expose or defend technology, unverified claims can gain traction faster than facts.

This episode highlights the importance of critical literacy in the AI era, where screenshots can travel faster than science and speculation can appear as evidence.

Until credible replication or official data emerges, the chart remains an internet artefact, a cautionary tale about how easily we project human fears and biases onto the systems built to reflect us.