TikTok has announced new tools that let users control how much AI-generated content appears in their feed. The platform is also adding invisible watermarks to help it identify AI-generated videos, with the aim of making it harder for fake content to deceive users.

These updates address growing concerns about AI content flooding social feeds. As companies like Meta and OpenAI push AI-only platforms, TikTok takes a different path by giving users more control.

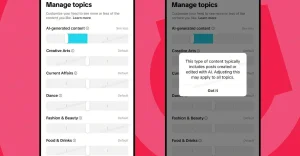

Users Can Now Filter AI Content From Their Feed

TikTok is testing a new feature that lets users adjust the level of AI-generated content in their “For You” feed. This control is integrated into the existing “Manage Topics” tool, which already handles categories like Dance, Sports, and Food.

The feature works like a slider. Users who enjoy AI-generated content can choose to see more of it, while those tired of AI videos can significantly reduce how often they appear.
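TikTok has not published how the slider affects ranking, so the following is only a minimal sketch of the general idea. It assumes a hypothetical `ai_preference` value between -1.0 and 1.0 and a hypothetical per-video relevance `score`. Neither name comes from TikTok.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    score: float        # hypothetical base relevance score
    ai_generated: bool  # hypothetical flag set by labelling or detection


def rerank(videos, ai_preference):
    """Re-rank a feed using a hypothetical slider value.

    -1.0 means "show much less AI content", 0.0 means "no change",
    and 1.0 means "show more AI content".
    """
    if not -1.0 <= ai_preference <= 1.0:
        raise ValueError("ai_preference must be between -1.0 and 1.0")

    # AI videos have their score scaled by a factor between 0.0 and 2.0.
    factor = 1.0 + ai_preference

    def adjusted(video):
        return video.score * factor if video.ai_generated else video.score

    return sorted(videos, key=adjusted, reverse=True)


feed = [
    Video("AI history explainer", 0.9, True),
    Video("Street food tour", 0.8, False),
    Video("AI celebrity mashup", 0.7, True),
]

less_ai = rerank(feed, -0.5)  # the non-AI video moves to the top
more_ai = rerank(feed, 0.5)   # both AI videos move ahead of it
```

In this sketch, AI videos are pushed down the feed rather than removed, which matches the article's description of reducing AI content rather than blocking it.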

“People who love AI-generated history content can see more of this content, while those who’d rather see less can choose to dial things down,” TikTok explained in its official announcement.

AI-generated videos have flooded TikTok since OpenAI launched its Sora video app in September. Many creators now use AI tools to make visuals for history lessons, celebrity content, and other topics.

Invisible Watermarks Make AI Content Harder to Hide

TikTok also introduced “invisible watermarking” technology to better identify AI content. These digital fingerprints are embedded in the video itself and are designed to be difficult to remove.

Current labelling systems have a major flaw. People often strip away visible watermarks or metadata when they repost content on different platforms. This makes it hard to track AI-generated videos as they spread across the internet.

The new invisible watermarks are meant to address this problem. Because only TikTok can read these digital signatures, they are much harder for others to find and remove. The company will add the watermarks to content created using TikTok tools like AI Editor Pro.

TikTok already uses C2PA Content Credentials, an industry standard that embeds metadata into media files. The platform has labelled over 1.3 billion videos using various detection methods. However, these labels often disappear when content gets edited or reuploaded elsewhere.

TikTok Invests $2 Million in AI Education

The platform announced a $2 million fund to teach users about AI literacy and safety. The money will go to experts, including the nonprofit Girls Who Code, to create educational content that appears in users’ feeds.

The fund supports more than 20 experts across more than a dozen global markets. These creators will make videos that help people understand AI technology and spot potentially harmful content.

Partnership Efforts Expand Detection Technology

TikTok joined the Partnership on AI as a sponsor and participates in two steering committees focused on the impact of AI on enterprise and human connection. The company has been a founding member of the organization’s Framework for Responsible Practices for Synthetic Media since 2023.

This collaboration enables TikTok to stay current with industry best practices as AI detection technology continues to evolve. The company regularly updates its approach in response to expert guidance and changing industry standards.

The Battle Against AI Detection Limits

Invisible watermarks face real challenges. Recent research suggests that all current watermarking systems can be broken with enough effort. Extreme editing, format changes, or specific attacks can destroy these digital signatures.
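To illustrate why format changes can erase a watermark, here is a toy sketch in Python. It is not TikTok's method, which has not been published, and production watermarks are built to be far more robust. It uses a deliberately fragile textbook technique, hiding bits in the least significant bit of each pixel value, and a crude `requantize` function as a stand-in for lossy re-encoding. All values are made up.

```python
def embed(pixels, bits):
    """Hide one bit in the least significant bit of each leading pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract(pixels, n_bits):
    """Read the lowest bit of the first n_bits pixel values."""
    return [p & 1 for p in pixels[:n_bits]]


def requantize(pixels, step=4):
    """Crude stand-in for lossy re-encoding: round each value down to a multiple of step."""
    return [(p // step) * step for p in pixels]


pixels = [52, 55, 61, 66, 70, 61, 64, 73]  # made-up 8-bit grayscale values
mark = [1, 0, 1, 1, 0, 1, 0, 1]            # made-up watermark bits

marked = embed(pixels, mark)

survives_copy = extract(marked, len(mark)) == mark                  # True
survives_reencode = extract(requantize(marked), len(mark)) == mark  # False
```

Each pixel changes by at most one brightness level, so the mark is invisible to the eye. An exact copy keeps the mark, but even mild requantization wipes it out.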

However, TikTok believes layered defenses work better than single solutions. The platform combines creator labelling, automated detection, C2PA credentials, and now invisible watermarks to catch AI content from multiple angles.
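The layered approach can be sketched as a simple rule: label a video as AI-generated if any one of the signals fires. TikTok has not described how it actually combines these signals, so the field names, the `threshold` value, and the "any signal is enough" rule below are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    creator_labelled: bool = False  # creator marked the upload as AI-generated
    c2pa_says_ai: bool = False      # Content Credentials metadata declares AI generation
    watermark_found: bool = False   # an invisible watermark was detected
    classifier_score: float = 0.0   # hypothetical automated detector output, 0.0 to 1.0


def ai_label_reasons(signals, threshold=0.9):
    """Return the list of layers that flagged the video (empty if none did)."""
    reasons = []
    if signals.creator_labelled:
        reasons.append("creator label")
    if signals.c2pa_says_ai:
        reasons.append("C2PA credentials")
    if signals.watermark_found:
        reasons.append("invisible watermark")
    if signals.classifier_score >= threshold:
        reasons.append("automated detection")
    return reasons


# A reupload whose metadata was stripped but whose watermark survived.
reupload = Signals(watermark_found=True, classifier_score=0.4)
reupload_reasons = ai_label_reasons(reupload)  # ["invisible watermark"]

# A video that no layer flags.
unflagged_reasons = ai_label_reasons(Signals(classifier_score=0.2))  # []
```

The point of the design is redundancy: stripping metadata defeats the C2PA check, but the video is still caught if the watermark or another layer remains intact.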

Rolling Out Across the Platform

Both features will roll out over the coming weeks. Users will be able to access the AI content control through Settings > Content Preferences > Manage Topics. The invisible watermarking starts with TikTok’s own AI tools before expanding to other content.

TikTok promises to keep updating these systems as technology and industry standards evolve. The company maintains strict policies against harmful AI content while supporting creative uses of the technology.